Identity Theft in the Era of Advanced AI

Art of Deception

Identity theft is on the brink of a startling surge, and distinguishing authenticity from deception is becoming increasingly challenging.

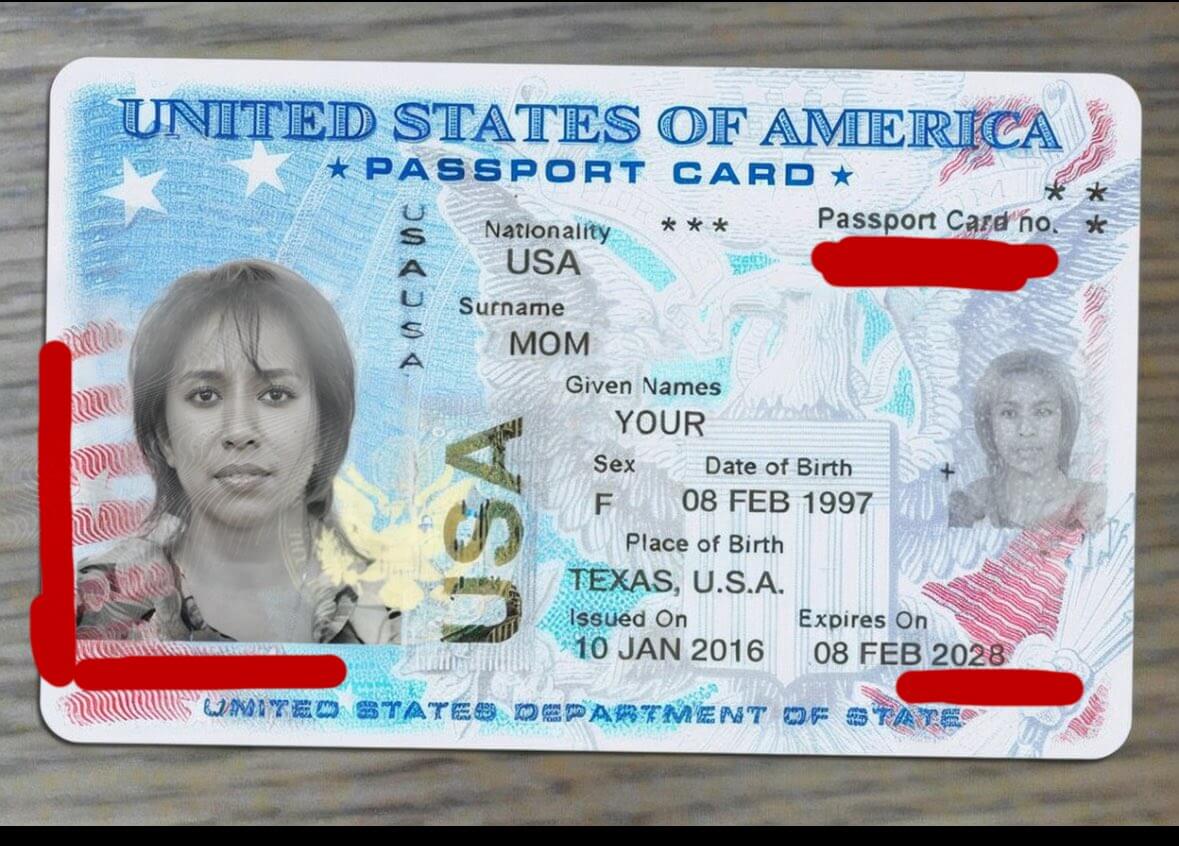

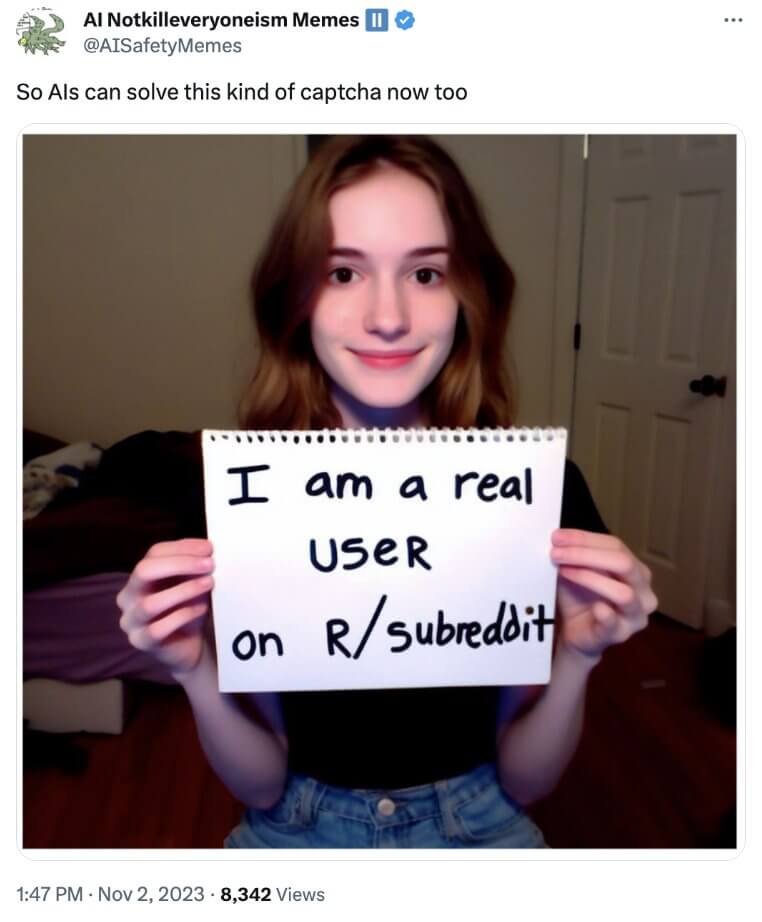

Take a look at these images [1, 2, 3].

Can you tell if they’re authentic or fake?

All of these images are AI-generated, designed to trick the first-level verification systems that are a staple of many financial institutions and KYC (Know Your Customer) protocols.

Now let’s look at what one Reddit user did.

The first image was created by Reddit user u/harsh and shared on r/StableDiffusion. The rest were inspired by u/harsh’s work.

Workflow - Fully Open Source [4]

1. Face Generation with LoRAs

LoRAs, or Low-Rank Adaptations, are a technique used in AI image generation, particularly with models like Stable Diffusion. They serve as a way to fine-tune these models by introducing new concepts or styles into the generation process. This makes them useful for generating images with particular styles or subjects with greater fidelity. You can check out Civitai for some examples.
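For readers who want the idea behind the name, here is a brief sketch of the general low-rank update, written in the notation commonly used for LoRA. The symbols are not from the original post: $W$ is a frozen pretrained weight matrix, $B$ and $A$ are the small trained matrices, $r$ is the rank, and $\alpha$ is a scaling hyperparameter.

```latex
W' = W + \frac{\alpha}{r}\, B A,
\qquad W \in \mathbb{R}^{d \times k},\quad
B \in \mathbb{R}^{d \times r},\quad
A \in \mathbb{R}^{r \times k},\quad
r \ll \min(d, k)
```

Only $B$ and $A$ are trained, which is why a LoRA file is small compared with the base model it adapts.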

Begin by generating a set of faces without using LoRAs. To enhance the chosen image, apply a combination of LoRAs. For instance, mix a lower-quality face LoRA you’ve trained with a small proportion (around 0.3) of a high-quality celebrity LoRA. This blend can significantly improve the variety and quality of the outputs.

2. Refining the Face

Reuse the seed from your chosen image and refine the face with the combined LoRAs and a ControlNet. The ControlNet allows for more detailed adjustments.

3. Adding Text to a Card

- Generate an image of blank paper using Stable Diffusion.

- Write the desired text on a physically textured paper.

- In Photoshop, overlay this paper as a ‘hard light’ layer over the generated blank paper, and clean up as needed.

Alternatively, one can use tools like Calligrapher AI for automatic generation.

The images you just saw were created this week (January 6, 2024); the picture below [5] is from November 2, 2023. That is an incredible advance in under three months.

Future Threats

The threat is clear: criminals are poised to exploit open-source models to craft flawless deepfakes of someone real or to create entire new personas for fraud.

However, the real issue isn’t just identity theft; it’s a problem that is going to cause massive headaches for everyone involved, from ordinary people to politicians. Once this level of quality translates to video generation, we’ll face an unparalleled crisis.

Even dead politicians are affected. The Flemish Christian Democrat party, CD&V, used AI to bring its former PM back to life. See the Twitter (X) post; more info is available on VRT.be.

Imagine a similar fabricated picture or video depicting your abduction being sent to your parents with a ransom demand, all courtesy of AI technology. 👍

Banks might need to promptly bolster their security protocols and regulations to combat this threat, assuming they haven’t already done so.

As ordinary people, we might have to assume everything is fake by default, much like the dilemma deepfakes created with revenge porn, and then figure out a way to verify content for authenticity.
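One possible direction for such verification, offered only as a minimal sketch and not as something the sources above describe: a sender attaches an authentication tag to a file, and the recipient recomputes it before trusting the content. The function names `sign_content` and `is_authentic` and the demo key are hypothetical, and the sketch assumes both parties already share a secret key. Real provenance systems typically rely on public-key signatures instead.

```python
import hashlib
import hmac


def sign_content(content: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the given content (hypothetical helper)."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def is_authentic(content: bytes, tag: str, key: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_content(content, key)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    # Assumption: the key was exchanged in advance over a trusted channel.
    key = b"demo-shared-secret"
    original = b"example image bytes"
    tag = sign_content(original, key)

    print(is_authentic(original, tag, key))                 # unmodified content
    print(is_authentic(b"tampered image bytes", tag, key))  # altered content
```

A tag like this only shows that the content is unchanged since someone holding the key signed it. It says nothing about whether the content was truthful to begin with.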

That’s Not All

Imagine adding a fabricated or cloned voice, your own voice, to everything mentioned above.

Prof. Ethan Mollick has a simple but amazing demonstration of this. [6]

This is a completely fake video of me. The AI (HeyGen) used 30 seconds of me talking to a webcam and 30 seconds of my voice, and now I have an avatar that I can make say anything. Don't trust your own eyes.

— Ethan Mollick (@emollick) January 5, 2024

Its not perfect, but a two minute recording would yield better results. pic.twitter.com/HkIooCo6Zu

Click on the tweet to see the full thread. He explains and shows the original images and voices alongside the fabricated content. More from Ethan: “A quick and sobering guide to cloning yourself” and “AI is Already Altering the Truth”.

A similar thread on how to create fabricated videos and voices was shared by @LinusEkenstam: Make an Instant AI Avatars (Clones) for free and Animate-Anyone.

AI Influencers

I’m not going to explain this; it’s something you should know.

- theClueless AI - Company

- @lilmiquela - Only on Instagram

- Serenay - First in Turkey

Want to create your own AI influencer? Read This!!! by @emmanuel_2m

A comment from Simo Ryu, who first brought LoRA (Low-Rank Adaptation) to Stable Diffusion: “Little did I realize year later thousands of deepfake waifu LoRAs would be flooding on the web…”

We Already See Its Problems

The imminent impact of AI on identity verification processes in Know-Your-Customer systems is undeniable. As AI progresses, it becomes easier and cheaper to replicate someone’s likeness and identity markers, which are often acquired from data breaches. Consequently, infiltrating accounts, stealing funds, compromising data, and damaging brands will become simpler for malicious actors. And it has already started:

- AI deepfakes are getting better at spoofing KYC verification — Binance exec

- New North America Fraud Statistics: Forced Verification and AI/Deepfake Cases Multiply at Alarming Rates

- Liveness tests used by banks to verify ID are ‘extremely vulnerable’ to deepfake attacks

- How Underground Groups Use Stolen Identities and Deepfakes

Just a short while ago, deceiving these systems was a formidable challenge. However, with the advancement of deepfakes and AI-generated personas, breaching these security measures is increasingly within reach.

The landscape of security and authenticity is rapidly shifting, and the window for relying on video verification systems may be closing sooner than we think.